In this article, I am going to show you how to install an HPC cluster in virtual machines on your own computer. I focus on a minimalistic setup: a couple of virtual machines connected to shared storage. I will not show you how to install a cluster management system, nor how to set up and use a job scheduler. The main idea behind this article is to present the most straightforward and cost-effective way to get into supercomputer programming. For example, it can be a good platform to prototype and develop code that will eventually run on a real HPC cluster. Having your own virtual cluster lets you test your programs without opening an interactive session and without the overhead of a job scheduler: there is no job to submit and no queue to wait in before the program even starts.

Requirements

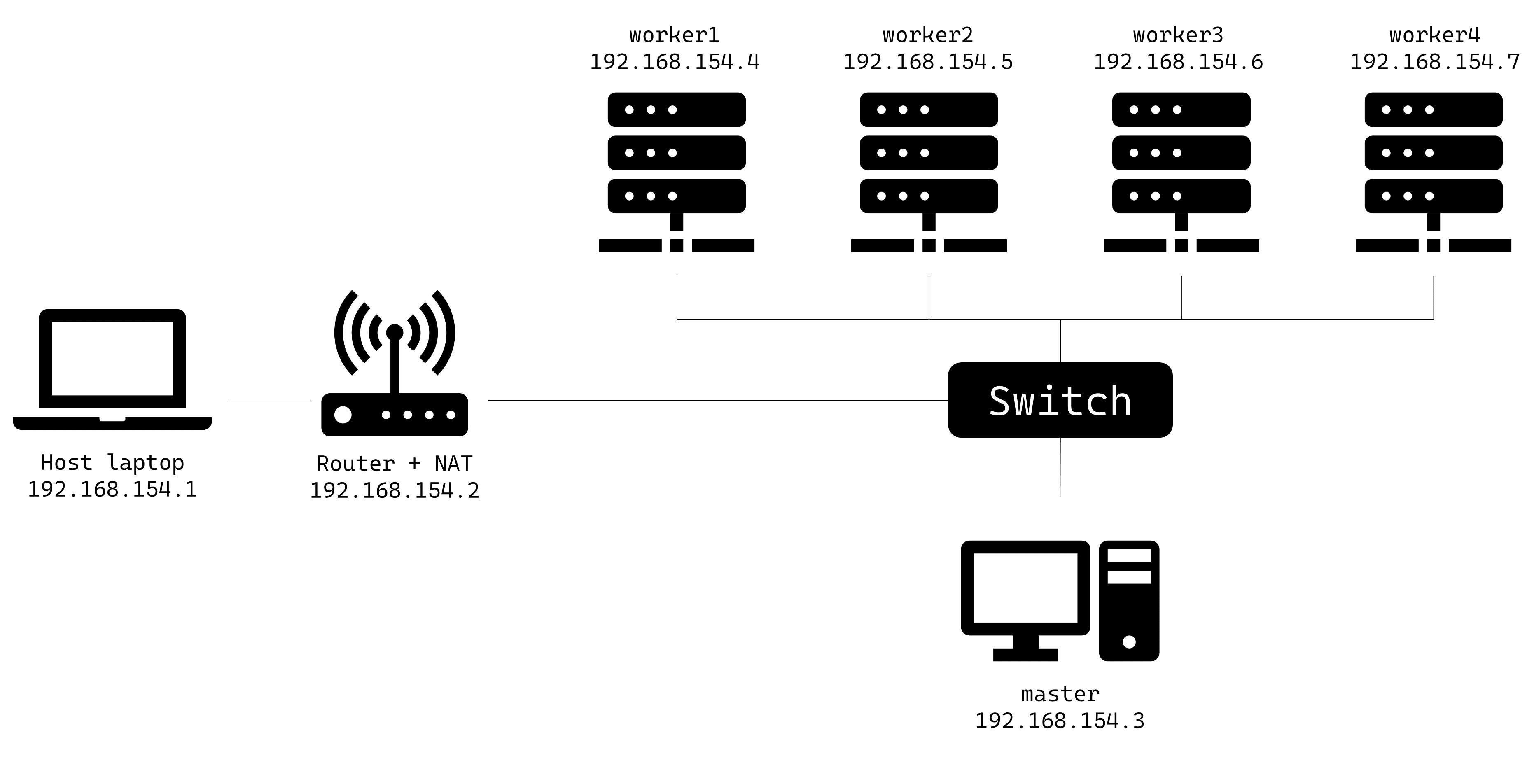

Regarding hardware, I am using a laptop with 8 cores (16 threads with Hyper-Threading) and 32 GB of RAM. I run one master node and 4 compute nodes, each with 2 cores and 4 GB of RAM. Although you can use a different software stack, throughout the article I will use the following software:

- Windows 10 for the host OS
- VMware Workstation Pro 16 for virtualization
- Ubuntu Server 20.04 LTS for the master and compute nodes
- MPICH 4.0 for message passing
- NFS for shared storage

System diagram

Installation of the master node

Base installation

The first step is to install Ubuntu Server LTS on a virtual machine. Note that my username in this article is mgaillard; you can of course use any other name. The master node needs the following packages:

```shell
# Install relevant packages for the master node
$ sudo apt install cmake git build-essential nfs-kernel-server nfs-common
```

Network configuration

Once the VM is installed, we need to configure its hostname and static IP address.

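```shell
# Change the hostname to "master"
$ sudo nano /etc/hostname

# Assign a static IP address to the master node
$ sudo nano /etc/netplan/00-installer-config.yaml
```

Replace the content of the netplan file with the following configuration (the interface name, here ens33, may differ on your VM):

```yaml
network:
  ethernets:
    ens33:
      dhcp4: no
      addresses:
        - 192.168.154.3/24
      gateway4: 192.168.154.2
      nameservers:
        addresses: [192.168.154.2]
  version: 2
```

Then apply the new network configuration:

```shell
$ sudo netplan apply
```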
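Every node uses the same netplan layout and only the address changes, so the file can be generated instead of typed by hand on each VM. The sketch below is a hypothetical helper (`netplan_yaml` is not part of netplan) that prints the configuration for a given last byte of the address. It assumes the interface name `ens33`, the 192.168.154.0/24 network and the gateway 192.168.154.2 used above; check the interface name on your VM with `ip link` first.

```shell
#!/bin/sh
# Hypothetical helper: print a netplan configuration with a static IPv4
# address. Assumes interface ens33 and the 192.168.154.0/24 VMware network
# from this article (gateway and DNS server on 192.168.154.2).
netplan_yaml() {
    octet="$1"  # last byte of the node address, e.g. 3 for the master
    cat <<EOF
network:
  ethernets:
    ens33:
      dhcp4: no
      addresses:
        - 192.168.154.${octet}/24
      gateway4: 192.168.154.2
      nameservers:
        addresses: [192.168.154.2]
  version: 2
EOF
}
```

For example, `netplan_yaml 3 | sudo tee /etc/netplan/00-installer-config.yaml` followed by `sudo netplan apply` should reproduce the master configuration.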

To ease communication with the other nodes, we add their names to the hosts file. Edit /etc/hosts with the command sudo nano /etc/hosts, and add the following lines at the beginning of the file:

```text
127.0.0.1 localhost

# MPI cluster setup
192.168.154.3 master
192.168.154.4 worker1
192.168.154.5 worker2
192.168.154.6 worker3
192.168.154.7 worker4
```
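The same entries are needed on every node, so they can also be generated from the number of workers. A minimal sketch, assuming the addressing scheme above (master on .3, worker *i* on .3 + *i*); `hosts_block` and `install_hosts_block` are hypothetical helper names. The entries are written at the top of the file, as recommended above, so they come before any existing line for the same name (such as Ubuntu's default 127.0.1.1 entry).

```shell
#!/bin/sh
# Hypothetical helper: print the cluster entries for /etc/hosts.
# Assumes master = 192.168.154.3 and worker i = 192.168.154.(3 + i).
hosts_block() {
    n_workers="$1"
    echo "# MPI cluster setup"
    echo "192.168.154.3 master"
    i=1
    while [ "$i" -le "$n_workers" ]; do
        echo "192.168.154.$((3 + i)) worker$i"
        i=$((i + 1))
    done
}

# Hypothetical helper: prepend the entries to a hosts file (default
# /etc/hosts, which requires root). Does nothing if they are already there.
install_hosts_block() {
    n="$1"
    hosts_file="${2:-/etc/hosts}"
    if grep -q '^# MPI cluster setup$' "$hosts_file"; then
        return 0
    fi
    tmp="$(mktemp)"
    # Cluster entries first, then the original content of the file
    { hosts_block "$n"; cat "$hosts_file"; } > "$tmp"
    # Write back in place so the file keeps its owner and permissions
    cat "$tmp" > "$hosts_file"
    rm -f "$tmp"
}
```

After saving the functions to a file (hosts.sh is a name chosen here for illustration), running `sudo sh -c '. ./hosts.sh && install_hosts_block 4'` on each node should produce the same entries as above.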

Install MPICH

MPICH is an implementation of the Message Passing Interface (MPI) standard. It is the library that programs running on the HPC cluster use to communicate between compute nodes. Another popular MPI implementation is Open MPI. The following commands download, compile, and install MPICH on Ubuntu:

```shell
# Download MPICH
$ wget https://www.mpich.org/static/downloads/4.0/mpich-4.0.tar.gz
$ tar xfz mpich-4.0.tar.gz
$ rm mpich-4.0.tar.gz

# Compile MPICH
$ cd mpich-4.0
$ ./configure --disable-fortran
$ make
$ sudo make install

# Check installation
$ mpiexec --version
```

Configuration of the NFS server

To run a program in a distributed manner on multiple nodes simultaneously, the executable must be accessible from all nodes. The simplest way to achieve this is to set up an NFS share on the master node and mount it on the compute nodes.

```shell
# Create the shared directory on the master node
$ cd ~
$ mkdir cloud

# Add an entry to the /etc/exports file:
# /home/mgaillard/cloud *(rw,sync,no_root_squash,no_subtree_check)
$ echo "/home/mgaillard/cloud *(rw,sync,no_root_squash,no_subtree_check)" | sudo tee -a /etc/exports
$ sudo exportfs -ra
$ sudo mount -a
$ sudo service nfs-kernel-server restart
```
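Running the `echo ... | sudo tee -a` command twice adds a duplicate line to /etc/exports. The sketch below is a hypothetical idempotent variant (`add_export` is an illustrative name) that only appends the entry when the directory is not exported yet. As an assumption that differs from the article, it defaults the client specification to the cluster subnet 192.168.154.0/24 instead of `*`, which limits the share to the virtual network; pass `'*'` to keep the original behavior.

```shell
#!/bin/sh
# Hypothetical helper: add an NFS export unless the directory is already
# listed. The default client spec (cluster subnet) is an assumption.
add_export() {
    dir="$1"
    clients="${2:-192.168.154.0/24}"
    exports_file="${3:-/etc/exports}"
    # An existing line starting with the same directory means "already done"
    if grep -q "^${dir}[[:space:]]" "$exports_file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s(rw,sync,no_root_squash,no_subtree_check)\n' \
        "$dir" "$clients" >> "$exports_file"
}
```

After adding the entry as root, `sudo exportfs -ra` reloads the exports and `sudo exportfs -v` lists the directories that are actually shared.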

Installation of the worker nodes

Base installation

The first step is to install Ubuntu Server LTS on a virtual machine. To save time, you can also clone the master VM directly. In that case, shut down the master node before booting the cloned worker VM: both machines would otherwise have the same IP address, which causes conflicts. The worker nodes need the following packages:

```shell
# Install relevant packages for the worker nodes
$ sudo apt install cmake git build-essential nfs-common
```

Network configuration

Follow the same instructions as for the master node, but set the hostname and IP address of each worker as follows:

- worker1: 192.168.154.4

- worker2: 192.168.154.5

- worker3: 192.168.154.6

- worker4: 192.168.154.7
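When a worker is cloned from the master VM, only the hostname and the address need to change, following the list above. The sketch below is a hypothetical helper (`configure_clone` is an illustrative name) that writes the worker hostname and replaces the master address 192.168.154.3/24 in the netplan file. It assumes worker *i* uses 192.168.154.(3 + *i*) and that the netplan file is the one created by the Ubuntu installer.

```shell
#!/bin/sh
# Hypothetical helper for a worker cloned from the master VM.
# Assumes worker i uses 192.168.154.(3 + i), as in the list above.
configure_clone() {
    worker="$1"
    hostname_file="${2:-/etc/hostname}"
    netplan_file="${3:-/etc/netplan/00-installer-config.yaml}"
    ip="192.168.154.$((3 + worker))"

    # New hostname, e.g. "worker1"
    echo "worker${worker}" > "$hostname_file"

    # Rewrite only the master address; gateway and DNS lines are untouched
    tmp="$(mktemp)"
    sed "s|192\.168\.154\.3/24|${ip}/24|" "$netplan_file" > "$tmp"
    cat "$tmp" > "$netplan_file"
    rm -f "$tmp"
}
```

On a fresh clone, run the helper as root with the worker number (for example `configure_clone 1`), then reboot the VM so that both the new hostname and the new address take effect.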

Edit /etc/hosts with the command sudo nano /etc/hosts, and add the same lines as on the master node at the beginning of the file:

```text
127.0.0.1 localhost

# MPI cluster setup
192.168.154.3 master
192.168.154.4 worker1
192.168.154.5 worker2
192.168.154.6 worker3
192.168.154.7 worker4
```

Install MPICH

To install MPICH on the worker nodes, follow the same instructions as for the master node.

Configuration of the NFS client

```shell
# Create the shared directory on each worker node
$ cd ~
$ mkdir cloud

# On the workers, mount the shared directory located on the master node
$ sudo mount -t nfs master:/home/mgaillard/cloud ~/cloud

# Check mounted directories
$ df -h

# Make the mount permanent by adding an entry to the /etc/fstab file
$ sudo nano /etc/fstab
$ cat /etc/fstab
```

The /etc/fstab file should contain the following entry:

```text
# MPI cluster setup
master:/home/mgaillard/cloud /home/mgaillard/cloud nfs defaults 0 0
```
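The fstab entry can also be added by a script, which is handy when configuring four workers in a row. A minimal sketch, assuming the standard six-field fstab format with `defaults 0 0` options; `add_fstab_entry` is a hypothetical helper name. Note that the mount point in the second field must be an absolute path.

```shell
#!/bin/sh
# Hypothetical helper: add an NFS mount to fstab unless the mount point
# is already listed (commented lines are ignored).
add_fstab_entry() {
    remote="$1"        # e.g. master:/home/mgaillard/cloud
    mount_point="$2"   # absolute path, e.g. /home/mgaillard/cloud
    fstab="${3:-/etc/fstab}"
    # awk exits with 0 only if a non-comment line has this mount point
    if awk -v mp="$mount_point" \
        '$1 !~ /^#/ && $2 == mp { found = 1 } END { exit !found }' "$fstab"; then
        return 0
    fi
    printf '%s %s nfs defaults 0 0\n' "$remote" "$mount_point" >> "$fstab"
}
```

After adding the entry as root, `sudo mount -a` mounts everything listed in fstab, and `mountpoint /home/mgaillard/cloud` reports whether the share is mounted.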

Cluster all nodes together

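To make sure all nodes can communicate seamlessly, we need to generate SSH keys and copy them between the nodes. MPICH starts processes on remote nodes over SSH, so each node must be able to log in to the others without a password. On each node, run the following commands: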

```shell
$ ssh-keygen -t ed25519

# Copy the SSH key to all other nodes (except the current node)
$ ssh-copy-id master
$ ssh-copy-id worker1
$ ssh-copy-id worker2
$ ssh-copy-id worker3
$ ssh-copy-id worker4

# For passwordless SSH, load the key into the SSH agent
# (only needed if you protected the key with a passphrase)
$ eval "$(ssh-agent -s)"
$ ssh-add ~/.ssh/id_ed25519
```
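The "all other nodes" loop and a check that logins really need no password can be scripted. The sketch below is a hypothetical set of helpers; the `NODES` list is an assumption that mirrors the hosts file. `check_passwordless` uses `ssh -o BatchMode=yes`, which makes SSH fail instead of prompting, so any node that still asks for a password is reported.

```shell
#!/bin/sh
# Hypothetical helpers for the SSH step. NODES mirrors /etc/hosts.
NODES="master worker1 worker2 worker3 worker4"

# Print every node except the given one (default: the current host)
peers() {
    self="${1:-$(hostname)}"
    for node in $NODES; do
        if [ "$node" != "$self" ]; then
            echo "$node"
        fi
    done
}

# Copy the public key to every peer (asks for each password once)
copy_keys() {
    for node in $(peers); do
        ssh-copy-id "$node"
    done
}

# Report peers that do not accept a key-based login without a prompt
check_passwordless() {
    status=0
    for node in $(peers); do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$node" true; then
            echo "ok:   $node"
        else
            echo "FAIL: $node"
            status=1
        fi
    done
    return "$status"
}
```

Run `copy_keys` first, since it also records each node's host key, then `check_passwordless`: every line should start with `ok:`.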

Use the HPC cluster

In this section, we write a simple Hello World program, compile it, and run it on the HPC cluster. Create the file main.cpp in the shared directory (~/cloud) and paste the following program:

```cpp
#include <mpi.h>
#include <stdio.h>

int main(int argc, char** argv)
{
    // Initialize the MPI environment
    MPI_Init(NULL, NULL);

    // Get the number of processes
    int world_size;
    MPI_Comm_size(MPI_COMM_WORLD, &world_size);

    // Get the rank of the process
    int world_rank;
    MPI_Comm_rank(MPI_COMM_WORLD, &world_rank);

    // Get the name of the processor
    char processor_name[MPI_MAX_PROCESSOR_NAME];
    int name_len;
    MPI_Get_processor_name(processor_name, &name_len);

    // Print off a hello world message
    printf("Hello world from processor %s, rank %d out of %d processors\n",
           processor_name, world_rank, world_size);

    // Finalize the MPI environment.
    MPI_Finalize();

    return 0;
}
```

To ease compilation of C++ programs, I like to use CMake. Here is the CMakeLists.txt to compile the Hello World program:

```cmake
cmake_minimum_required(VERSION 3.16.0)

project(MpiHelloWorld LANGUAGES CXX)

set(CMAKE_CXX_STANDARD 17)
set(CMAKE_CXX_STANDARD_REQUIRED ON)

# Activate OpenMP
find_package(OpenMP REQUIRED)

# Activate MPI
find_package(MPI REQUIRED)

add_executable(MpiHelloWorld)

target_sources(MpiHelloWorld
    PRIVATE
        main.cpp
)

target_link_libraries(MpiHelloWorld
    PRIVATE
        OpenMP::OpenMP_CXX
        MPI::MPI_CXX
)
```

Finally, to compile and run the Hello World program, use the following commands:

```shell
# From the shared directory (~/cloud), create the build folder
$ mkdir build && cd build

# Compile using CMake
$ cmake -DCMAKE_BUILD_TYPE=Release ..
$ cmake --build .

# Run the program on 4 nodes
$ mpirun -n 4 --hosts worker1,worker2,worker3,worker4 ./MpiHelloWorld
```

You should get a result similar to this (the order of the lines can change from one run to the next):

```text
Hello world from processor worker1, rank 0 out of 4 processors
Hello world from processor worker3, rank 2 out of 4 processors
Hello world from processor worker2, rank 1 out of 4 processors
Hello world from processor worker4, rank 3 out of 4 processors
```
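Instead of listing the hosts with `--hosts`, MPICH's `mpiexec` also accepts a host file through `-f`, where each line has the form `host:processes`. The sketch below assumes the 2 cores per worker from the setup above and workers named worker1 to workerN; `write_machinefile` is a hypothetical helper name and machinefile an illustrative file name.

```shell
#!/bin/sh
# Hypothetical helper: write an MPICH host file with one "host:procs"
# line per worker. Assumes workers are named worker1..workerN.
write_machinefile() {
    n_workers="$1"
    cores="$2"                 # processes to start on each worker
    out="${3:-machinefile}"
    : > "$out"                 # start from an empty file
    i=1
    while [ "$i" -le "$n_workers" ]; do
        echo "worker${i}:${cores}" >> "$out"
        i=$((i + 1))
    done
}
```

For example, `write_machinefile 4 2` in the build folder, followed by `mpiexec -f machinefile -n 8 ./MpiHelloWorld`, should start eight ranks, two on each worker.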
