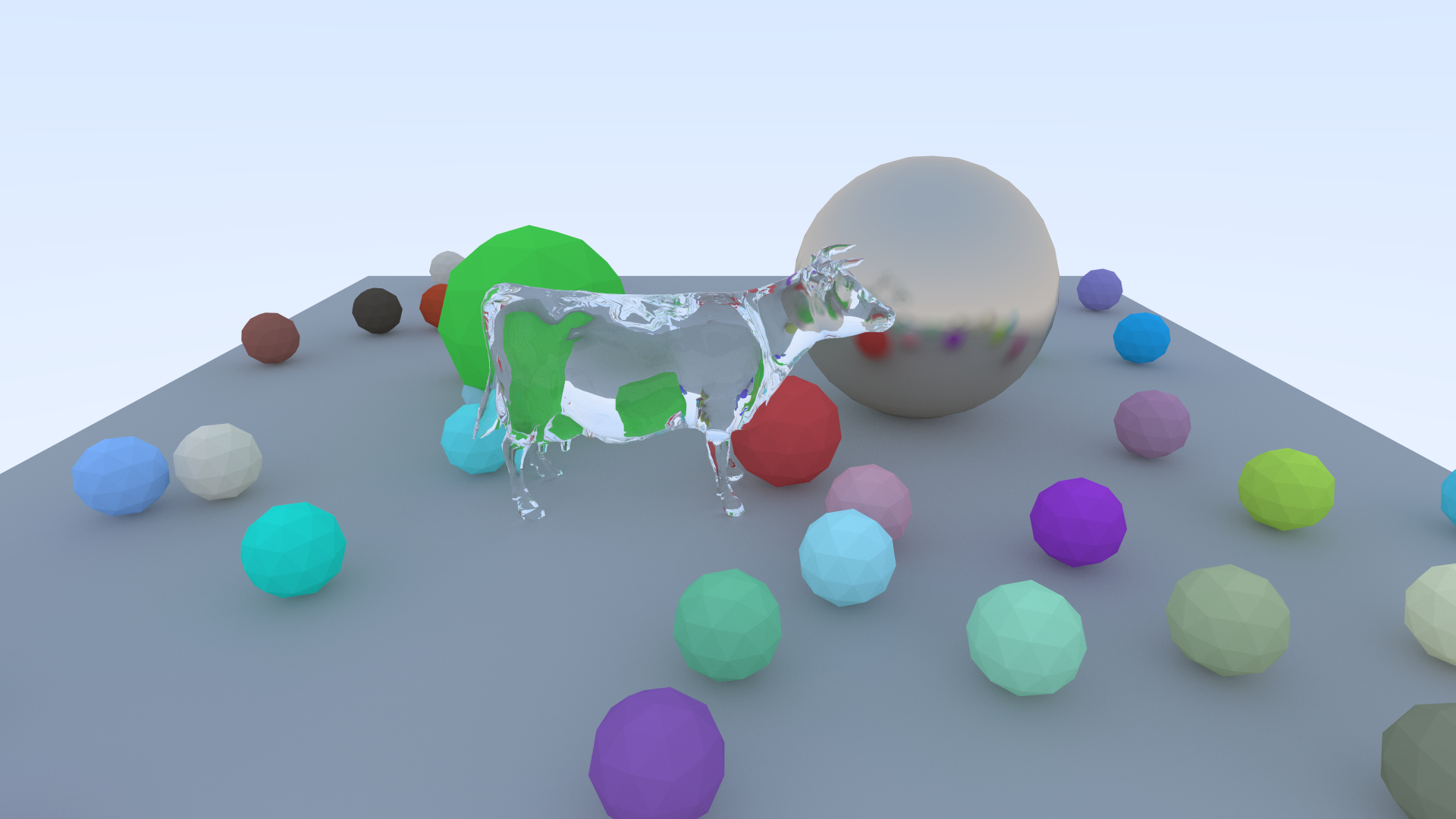

Here is the result of my own implementation of the book Ray Tracing in One Weekend.

The main difference between my implementation and the one presented in the book is that I use triangle meshes instead of only perfect spheres. As a consequence, I can render much more complex objects, but the drawback is that rendering takes a very long time, because I didn’t implement any acceleration data structure to speed up ray-triangle intersection. This image is in full HD (1920×1080) with 1024 samples per pixel, and rendering took 15 minutes on two 64-core AMD EPYC Rome processors.
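Since every ray has to be tested against the triangles of the meshes, the core operation is a ray-triangle intersection test. The post does not say which test the renderer uses. The sketch below assumes the widely used Möller–Trumbore algorithm, with hypothetical `Vec3`, `Ray` and `intersectTriangle` names rather than the repository's actual types.

```cpp
#include <cassert>
#include <cmath>
#include <optional>

// Hypothetical minimal vector and ray types (the repository has its own).
struct Vec3 {
    double x, y, z;
};

Vec3 operator-(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }

double dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

struct Ray {
    Vec3 origin;
    Vec3 direction;
};

// Möller–Trumbore ray/triangle intersection (assumed technique, not
// necessarily the one used in the repository). Returns the ray parameter t
// of the hit point if it lies strictly between tMin and tMax.
std::optional<double> intersectTriangle(const Ray& ray, const Vec3& v0, const Vec3& v1,
                                        const Vec3& v2, double tMin, double tMax) {
    assert(tMin < tMax);
    constexpr double kEpsilon = 1e-12;
    const Vec3 edge1 = v1 - v0;
    const Vec3 edge2 = v2 - v0;
    const Vec3 p = cross(ray.direction, edge2);
    const double det = dot(edge1, p);
    // A determinant close to zero means the ray is parallel to the triangle.
    if (std::fabs(det) < kEpsilon) return std::nullopt;
    const double invDet = 1.0 / det;
    const Vec3 s = ray.origin - v0;
    // Barycentric coordinates (u, v) of the hit point must lie inside the triangle.
    const double u = dot(s, p) * invDet;
    if (u < 0.0 || u > 1.0) return std::nullopt;
    const Vec3 q = cross(s, edge1);
    const double v = dot(ray.direction, q) * invDet;
    if (v < 0.0 || u + v > 1.0) return std::nullopt;
    // Position along the ray, in units of the direction vector's length.
    const double t = dot(edge2, q) * invDet;
    if (t <= tMin || t >= tMax) return std::nullopt;
    return t;
}
```

Without an acceleration structure, a brute-force renderer calls such a function for every triangle of every mesh and keeps the closest hit, so the cost per ray grows linearly with the number of triangles in the scene.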

Simple AABB acceleration (edit on 10/09/2022)

I wasn’t really satisfied with the performance of the ray tracing code. Originally, rendering took half a day on a Xeon Phi 7210 with 64 cores at 1.30 GHz. So I decided to implement a simple acceleration structure. For each triangle mesh in the 3D scene, I compute its axis-aligned bounding box (AABB). During rendering, before testing any triangle of a mesh, the ray tracer first checks whether the ray intersects the AABB of the mesh; if it does not, all triangles of that mesh are skipped. This very simple trick sped up rendering by a factor of 5.7.
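The bounding-box rejection described above can be sketched with the classic slab test. This is a minimal illustration, assuming hypothetical `AABB`, `computeAABB` and `hitAABB` names and a mesh stored as a plain list of vertices; it is not taken from the repository.

```cpp
#include <algorithm>
#include <cassert>
#include <utility>
#include <vector>

// Hypothetical minimal types for the sketch.
struct Vec3 {
    double x, y, z;
};

struct AABB {
    Vec3 min;
    Vec3 max;
};

// Access a coordinate by index (0 = x, 1 = y, 2 = z).
double component(const Vec3& v, int axis) { return axis == 0 ? v.x : (axis == 1 ? v.y : v.z); }

// Bounding box of a mesh, computed once from all of its vertices.
AABB computeAABB(const std::vector<Vec3>& vertices) {
    assert(!vertices.empty());
    AABB box{vertices.front(), vertices.front()};
    for (const Vec3& v : vertices) {
        box.min = {std::min(box.min.x, v.x), std::min(box.min.y, v.y), std::min(box.min.z, v.z)};
        box.max = {std::max(box.max.x, v.x), std::max(box.max.y, v.y), std::max(box.max.z, v.z)};
    }
    return box;
}

// Slab test: shrink the ray's [tMin, tMax] interval to the part where the ray
// lies between the two planes of each axis. The ray hits the box if the final
// interval is non-empty. Relies on IEEE 754 division: a zero direction
// component gives an infinite 1/d, which the min/max logic handles.
bool hitAABB(const AABB& box, const Vec3& origin, const Vec3& direction, double tMin,
             double tMax) {
    assert(tMin <= tMax);
    for (int axis = 0; axis < 3; ++axis) {
        const double invD = 1.0 / component(direction, axis);
        double t0 = (component(box.min, axis) - component(origin, axis)) * invD;
        double t1 = (component(box.max, axis) - component(origin, axis)) * invD;
        if (invD < 0.0) std::swap(t0, t1);
        tMin = std::max(tMin, t0);
        tMax = std::min(tMax, t1);
        if (tMax <= tMin) return false;
    }
    return true;
}
```

In the render loop, `hitAABB` would be called once per mesh, and the per-triangle test would only run when it returns `true`. Rays that miss a mesh's box skip all of its triangles, while rays that hit the box still test every triangle; a bounding volume hierarchy (BVH) applies the same idea recursively inside each mesh.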

Fixed camera model (edit on 10/11/2022)

I noticed a bug in the camera model: the aspect ratio of the generated image did not match its resolution in pixels. As a result, spheres appeared stretched into ellipses in the image. For reference, here is the original version of the image: Image with camera bug.
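The post does not describe the exact bug. A common way to avoid this class of problem is to derive the viewport's aspect ratio directly from the pixel resolution instead of hardcoding it separately. The sketch below assumes a camera defined by a vertical field of view, similar to the one in the book, and uses hypothetical `Viewport` and `makeViewport` names.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical viewport size, measured on a plane at distance 1 from the eye.
struct Viewport {
    double width;
    double height;
};

// Derive the viewport from the vertical field of view and the image resolution,
// so that the viewport's aspect ratio always matches the pixel grid.
Viewport makeViewport(double verticalFovDegrees, int imageWidth, int imageHeight) {
    assert(imageWidth > 0 && imageHeight > 0);
    constexpr double kPi = 3.14159265358979323846;
    const double theta = verticalFovDegrees * kPi / 180.0;
    const double height = 2.0 * std::tan(theta / 2.0);
    // Aspect ratio taken from the actual resolution, not from a separate constant.
    const double aspect = static_cast<double>(imageWidth) / static_cast<double>(imageHeight);
    return {aspect * height, height};
}
```

With this derivation, `width / imageWidth` equals `height / imageHeight`, so pixels are square and a sphere at the center of the image projects to a circle.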

Distributed implementation with MPI (edit on 10/24/2022)

To further improve rendering speed, I distributed the rendering code with MPI so that it runs on a supercomputer. First, all compute nodes generate the same scene. Then, during rendering, samples are distributed among the nodes. For example, to render an image with 1024 samples per pixel on two compute nodes, each node renders 512 samples per pixel. Finally, all samples are gathered on the master node and the image is gamma corrected. One very important thing to consider in this scenario is that the random seeds must differ between nodes; otherwise, every node generates exactly the same image.

To check scalability, I rendered the same image on one versus two nodes of the Bell supercomputer at Purdue University. On one node, I simply use the OpenMP version with 128 cores (two CPUs with 64 cores each). On two nodes, I distributed a total of 16 instances over the two nodes, each with 16 cores. I did this to split the computation per NUMA node, so that each instance runs on cores that share the same L3 cache and have uniform memory access. Rendering on 256 cores was twice as fast as on 128 cores!
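The bookkeeping behind this distribution can be sketched without the MPI calls themselves. The helpers below are hypothetical: `samplesForRank` splits the per-pixel sample budget across processes (also handling counts that do not divide evenly), `seedForRank` derives a distinct seed per process with a SplitMix64-style mixer, and `finalizeChannel` averages the summed samples and applies gamma 2 correction (a square root, as in the book). In an actual MPI program, the per-process sums would typically be combined on the master with `MPI_Reduce` and `MPI_SUM`; whether the repository does it that way is an assumption.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// Hypothetical helper: samples per pixel rendered by process `rank` when
// `totalSamples` are split over `ranks` processes. The first
// `totalSamples % ranks` processes take one extra sample so the total is exact.
int samplesForRank(int totalSamples, int ranks, int rank) {
    assert(totalSamples >= 0 && ranks > 0 && rank >= 0 && rank < ranks);
    const int base = totalSamples / ranks;
    const int remainder = totalSamples % ranks;
    return base + (rank < remainder ? 1 : 0);
}

// Hypothetical helper: a distinct random seed per process. Distinct ranks give
// distinct inputs (multiplying by an odd constant is invertible modulo 2^64),
// and the SplitMix64 finalizer is a bijection, so the seeds are distinct too.
std::uint64_t seedForRank(std::uint64_t baseSeed, int rank) {
    std::uint64_t z = baseSeed + 0x9E3779B97F4A7C15ULL * (static_cast<std::uint64_t>(rank) + 1);
    z = (z ^ (z >> 30)) * 0xBF58476D1CE4E5B9ULL;
    z = (z ^ (z >> 27)) * 0x94D049BB133111EBULL;
    return z ^ (z >> 31);
}

// Hypothetical helper for one color channel of one pixel: `perRankSums` holds
// each process's sum of linear sample values (what an MPI_Reduce with MPI_SUM
// would combine on the master). Average over all samples, then apply gamma 2.
double finalizeChannel(const std::vector<double>& perRankSums, int totalSamples) {
    assert(totalSamples > 0);
    double sum = 0.0;
    for (const double s : perRankSums) sum += s;
    return std::sqrt(sum / totalSamples);
}
```

Because the combined sum is divided by the total number of samples, processes that render different sample counts (for example 1000 samples over 16 processes) still contribute with the correct weight.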

More information

Link to the GitHub repository: mgaillard/Renderer

Link to the online book: Ray Tracing in One Weekend